A UK-based child protection group claims Apple is underreporting the number of abuse images on Apple devices in the UK. The organization says it has found more cases of abuse images on Apple platforms in the UK than Apple has reported globally.

The UK’s National Society for the Prevention of Cruelty to Children (NSPCC) claims Apple is vastly undercounting incidents of Child Sexual Abuse Material (CSAM) in services such as iCloud, FaceTime, and iMessage, according to a report by The Guardian. All US technology firms are required to report detected cases of CSAM to the National Center for Missing & Exploited Children (NCMEC). Apple made 267 such reports in 2023.

However, the NSPCC's own investigation found that Apple was implicated in 337 offenses between April 2022 and March 2023, in England and Wales alone.

“There is a concerning discrepancy between the number of UK child abuse image crimes taking place on Apple’s services and the almost negligible number of global reports of abuse content they make to authorities,” Richard Collard, head of child safety online policy at the NSPCC, said. “Apple is clearly behind many of their peers in tackling child sexual abuse when all tech firms should be investing in safety and preparing for the rollout of the Online Safety Act in the UK.”

By comparison, Google reported 1,470,958 cases in 2023. During the same period, 17,838,422 cases were reported on Facebook and 11,430,007 on Instagram.

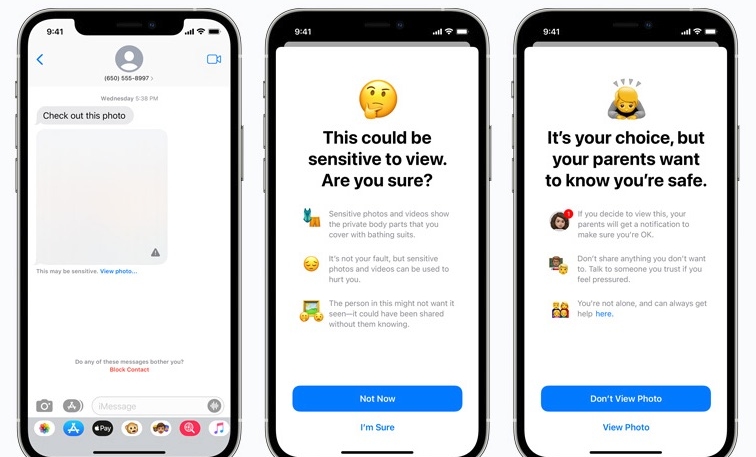

Apple is unable to see the contents of users’ iMessages, as it is an end-to-end encrypted service. However, the NCMEC notes that WhatsApp is also encrypted, yet Meta reported 1,389,618 suspected CSAM cases from it in 2023.