Apple has announced a number of new accessibility features that are on the way to the iPhone, iPad, Apple Watch, and Mac later this year. The Cupertino firm says the new features will bring additional ways for users with disabilities to “navigate, connect, and get the most out of Apple products.”

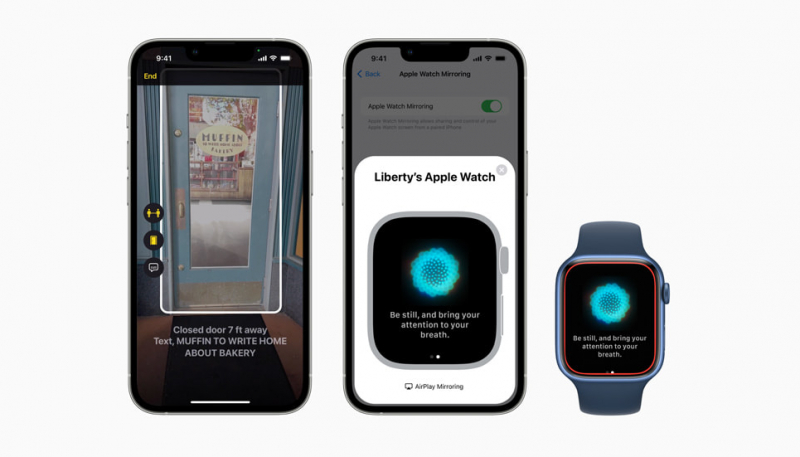

Blind or low-vision iPhone and iPad users will be able to take advantage of a new feature called Door Detection, which can help them locate a door when arriving at a new destination. It tells them how far they are from the door, whether it is open or closed, and whether it can be opened by pushing, pulling, turning a knob, or pulling a handle.

The feature can also read signs and symbols around a door, such as room numbers or the presence of an accessible entrance symbol. Door Detection uses a combination of LiDAR, the iPhone or iPad's camera, and machine learning to pull off this tech feat.

Apple Watch users will benefit from a new Apple Watch Mirroring feature that Apple describes as a way to make the Apple Watch "more accessible than ever for people with physical and motor disabilities."

Apple Watch Mirroring allows users to control their Apple Watch using iPhone’s assistive features like Voice Control and Switch Control, allowing them to use inputs including voice commands, sound actions, head tracking, or external Made for iPhone switches as an alternative to tapping the Apple Watch display.

Quick Actions is also coming to Apple Watch:

With new Quick Actions on Apple Watch, a double-pinch gesture can answer or end a phone call, dismiss a notification, take a photo, play or pause media in the Now Playing app, and start, pause, or resume a workout. This builds on the innovative technology used in AssistiveTouch on Apple Watch, which gives users with upper body limb differences the option to control Apple Watch with gestures like a pinch or a clench without having to tap the display.

Apple has also announced Live Captions for iPhone, iPad, and Mac, which should help users who are deaf or hard of hearing. The feature captions audio content in real time, whether on FaceTime calls, in social media or video apps, in streaming media content, or even when "having a conversation with someone next to them."

Live Captions in FaceTime attribute auto-transcribed dialogue to call participants, so group video calls become even more convenient for users with hearing disabilities. When Live Captions are used for calls on Mac, users have the option to type a response and have it spoken aloud in real time to others who are part of the conversation. And because Live Captions are generated on device, user information stays private and secure.

Other new accessibility features announced by Apple today include:

- With Buddy Controller, users can ask a care provider or friend to help them play a game; Buddy Controller combines any two game controllers into one, so multiple controllers can drive the input for a single player.

- With Siri Pause Time, users with speech disabilities can adjust how long Siri waits before responding to a request.

- Voice Control Spelling Mode gives users the option to dictate custom spellings using letter-by-letter input.

- Sound Recognition can be customized to recognize sounds that are specific to a person’s environment, like their home’s unique alarm, doorbell, or appliances.

- The Apple Books app will offer new themes, and introduce customization options such as bolding text and adjusting line, character, and word spacing for an even more accessible reading experience.

These features will be coming our way in software updates later this year, Apple says. For more information, read the company’s press release.