Apple employees are reportedly joining outsiders in raising concerns over the Cupertino firm’s plans to begin scanning iPhone users’ photo libraries for child sexual abuse material (CSAM). The employees are said to be speaking out internally about how the technology could be misused to scan for other types of content.

A report from Reuters says an unspecified number of Apple employees have taken to internal Slack channels to raise concerns over CSAM detection. Employees fear that governments could force Apple to use the technology to find other types of content, leading to censorship. Some also worry that Apple is damaging its stellar privacy reputation.

Apple employees have flooded an Apple internal Slack channel with more than 800 messages on the plan announced a week ago, workers who asked not to be identified told Reuters. Many expressed worries that the feature could be exploited by repressive governments looking to find other material for censorship or arrests, according to workers who saw the days-long thread.

Past security changes at Apple have also prompted concern among employees, but the volume and duration of the new debate is surprising, the workers said. Some posters worried that Apple is damaging its leading reputation for protecting privacy.

Apple employees in user security roles are not believed to have been part of the internal protest, says the report.

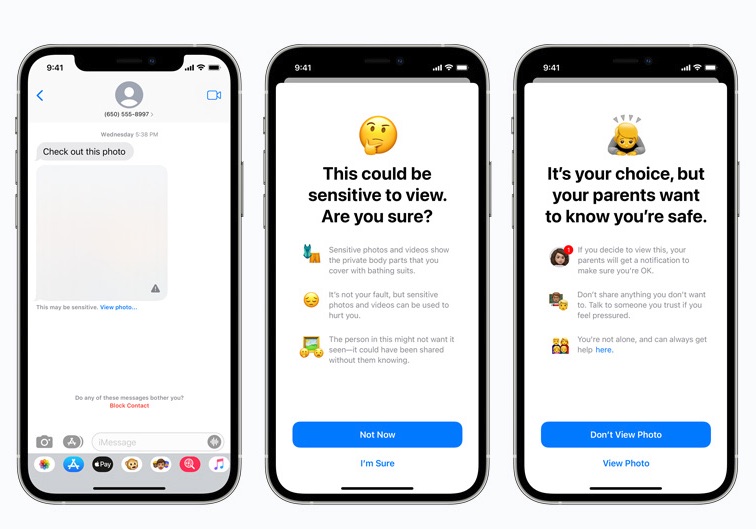

Apple announced last week that it would begin scanning iPhone users’ photo libraries for CSAM with the release of iOS 15 and iPadOS 15 later this year, and the plan was soon hit with a barrage of criticism. Most critics have expressed concern that governments could use the technology to oppress their citizens.

For its part, Apple has said that it will refuse any such governmental demands:

Could governments force Apple to add non-CSAM images to the hash list?

Apple will refuse any such demands. Apple’s CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC and other child safety groups. We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government’s request to expand it. Furthermore, Apple conducts human review before making a report to NCMEC. In a case where the system flags photos that do not match known CSAM images, the account would not be disabled and no report would be filed to NCMEC.

An open letter calling on Apple to immediately halt its plan to deploy CSAM detection had gained nearly 8,000 signatures at the time of writing.