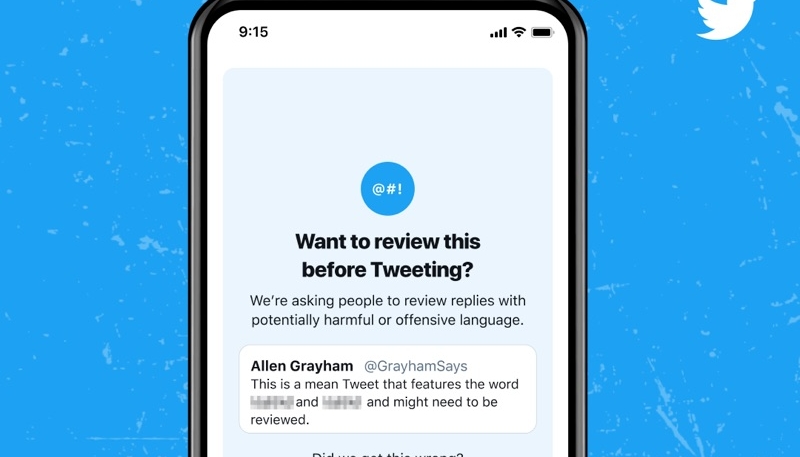

After one year of testing, Twitter is launching an offensive tweet warning feature for iOS and Android users that will encourage people to pause and reconsider a potentially “mean,” harmful, or offensive reply before they hit send.

The feature is enabled for all accounts that have English selected in their language settings and is now rolling out on iOS and Android.

“We began testing prompts last year that encouraged people to pause and reconsider a potentially harmful or offensive reply — such as insults, strong language, or hateful remarks — before Tweeting it. Once prompted, people had an opportunity to take a moment and make edits, delete, or send the reply as is.”

Twitter says the prompts resulted in people sending “less potentially offensive replies across the service”:

- If prompted, 34% of people revised their initial reply or decided not to send their reply at all.

- After being prompted once, people composed, on average, 11% fewer offensive replies in the future.

- If prompted, people were less likely to receive offensive and harmful replies back.

Twitter says it has incorporated the following into the system behind these prompts:

- Consideration of the nature of the relationship between the author and replier, including how often they interact. For example, if two accounts follow and reply to each other often, there’s a higher likelihood that they have a better understanding of the preferred tone of communication.

- Adjustments to our technology to better account for situations in which language may be reclaimed by underrepresented communities and used in non-harmful ways.

- Improvements to our technology to more accurately detect strong language, including profanity.

- An easier way for people to let us know if they found the prompt helpful or relevant.
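Twitter has not published how these signals are combined, so the following is only an illustrative sketch of how a reply prompt might weigh them. Every name here (`ReplyContext`, `should_prompt`, `toxicity_score`, `reclaimed_language`) and every number (the 0.8 base threshold, the 0.1 adjustments, the cutoff of 5 interactions) is a hypothetical assumption made for illustration, not Twitter's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class ReplyContext:
    # All fields are hypothetical stand-ins for signals the article describes.
    toxicity_score: float      # assumed classifier output in [0, 1]
    mutual_follow: bool        # author and replier follow each other
    recent_interactions: int   # how often the two accounts have replied to each other
    reclaimed_language: bool   # flagged terms appear to be used in a reclaimed, non-harmful way


def should_prompt(ctx: ReplyContext, base_threshold: float = 0.8) -> bool:
    """Return True if the reply should trigger a 'pause and reconsider' prompt.

    The threshold values are invented for this sketch.
    """
    threshold = base_threshold

    # Accounts that follow each other and interact often are more likely
    # to share an understanding of tone, so require a higher score.
    if ctx.mutual_follow and ctx.recent_interactions >= 5:
        threshold += 0.1

    # Be more lenient when language appears to be reclaimed rather than hostile.
    if ctx.reclaimed_language:
        threshold += 0.1

    return ctx.toxicity_score >= min(threshold, 1.0)


if __name__ == "__main__":
    stranger = ReplyContext(0.85, mutual_follow=False, recent_interactions=0, reclaimed_language=False)
    friend = ReplyContext(0.85, mutual_follow=True, recent_interactions=12, reclaimed_language=False)
    print(should_prompt(stranger), should_prompt(friend))
```

In this sketch the same reply text can prompt a stranger but not a frequent mutual contact, which mirrors the relationship-based consideration described in the list above.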